AI Risk Management

/ Addressing Threats & Vulnerabilities from Gen AI

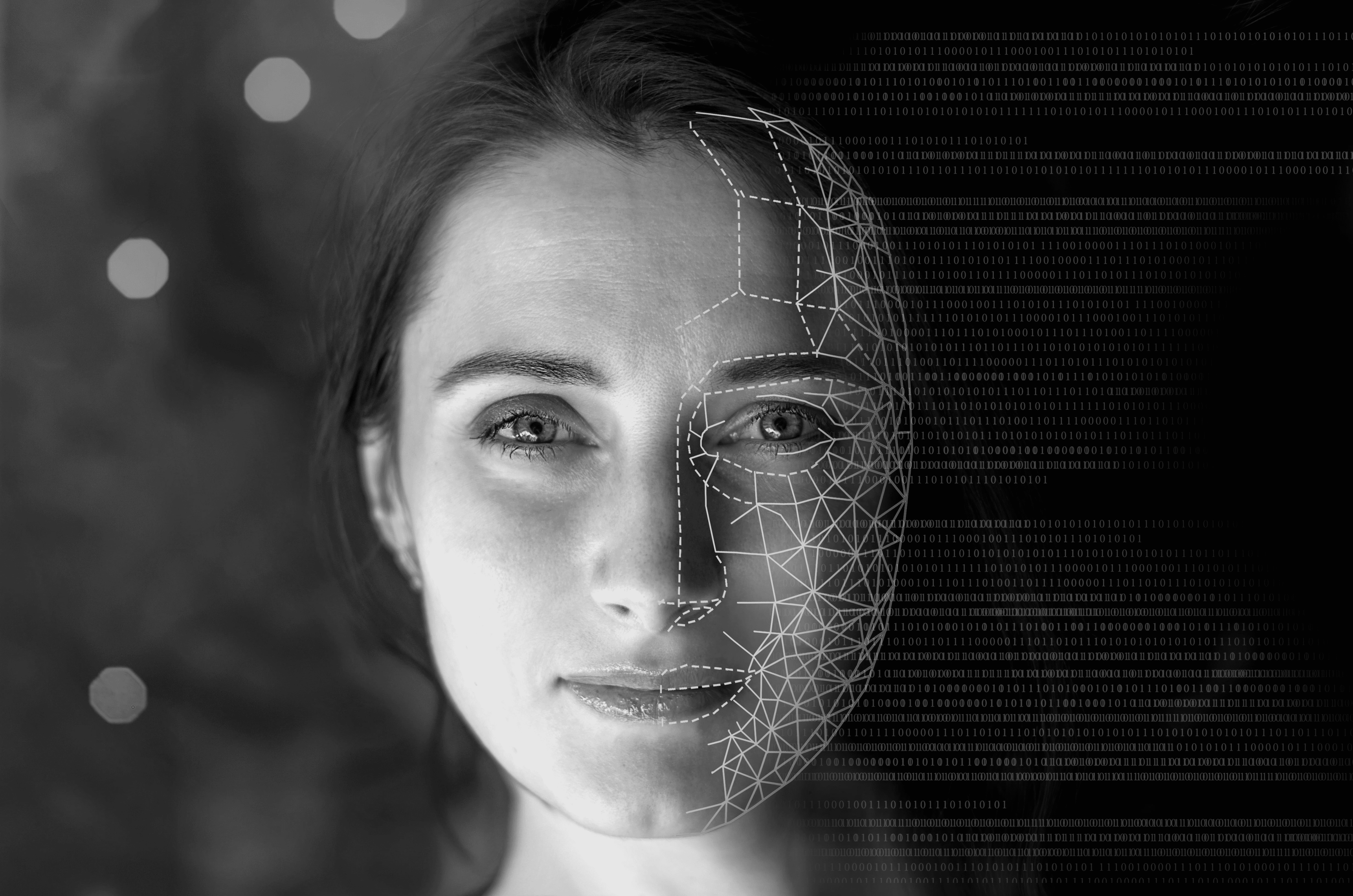

OpenAI’s Sora is making waves in the tech world. The Artificial Intelligence (AI) model, which can turn text prompts into realistic videos, will impact many different areas, including entertainment, education, and marketing, by creating script-to-screen movies, interactive learning materials, and personalized promotional videos. However, as with image-generating AI models such as DALL-E and other video-generating AI models such as Runway or Pika, the potential for misuse poses real dangers.

For example, AI-generated videos can be used to manipulate public opinion through defamation and the spread of fake news. This can be dangerous not only for politicians and parties but also for businesses. Perpetrators could create fake interviews with CEOs to damage a company’s reputation or try to deceive customers and employees by using the faces and voices of existing employees to gain access to sensitive information. A study by Sensity found that deepfake technology is increasingly being used for malicious purposes, with a 330% increase in deepfake videos online.

The European Union [EU] AI Act aims to ensure that AI systems deployed in the EU are safe and respect fundamental rights and EU values by setting rules for the way AI is trained, designed and developed. Depending on the risk an AI system poses, according to the categorization developed by the EU, deployers are subject to different transparency obligations.

However, there are shortcomings, as the law focuses more on the technology itself than on how it will be used in practice. For example, as a video-generating AI system, Sora is classified as ‘limited risk’, even though it could be used in ways that the EU considers ‘unacceptable risks’, such as behavioral manipulation and the exploitation of people’s vulnerable characteristics. This makes it even more important that companies know how to protect themselves in the face of this rapidly growing risk.

Figure 1

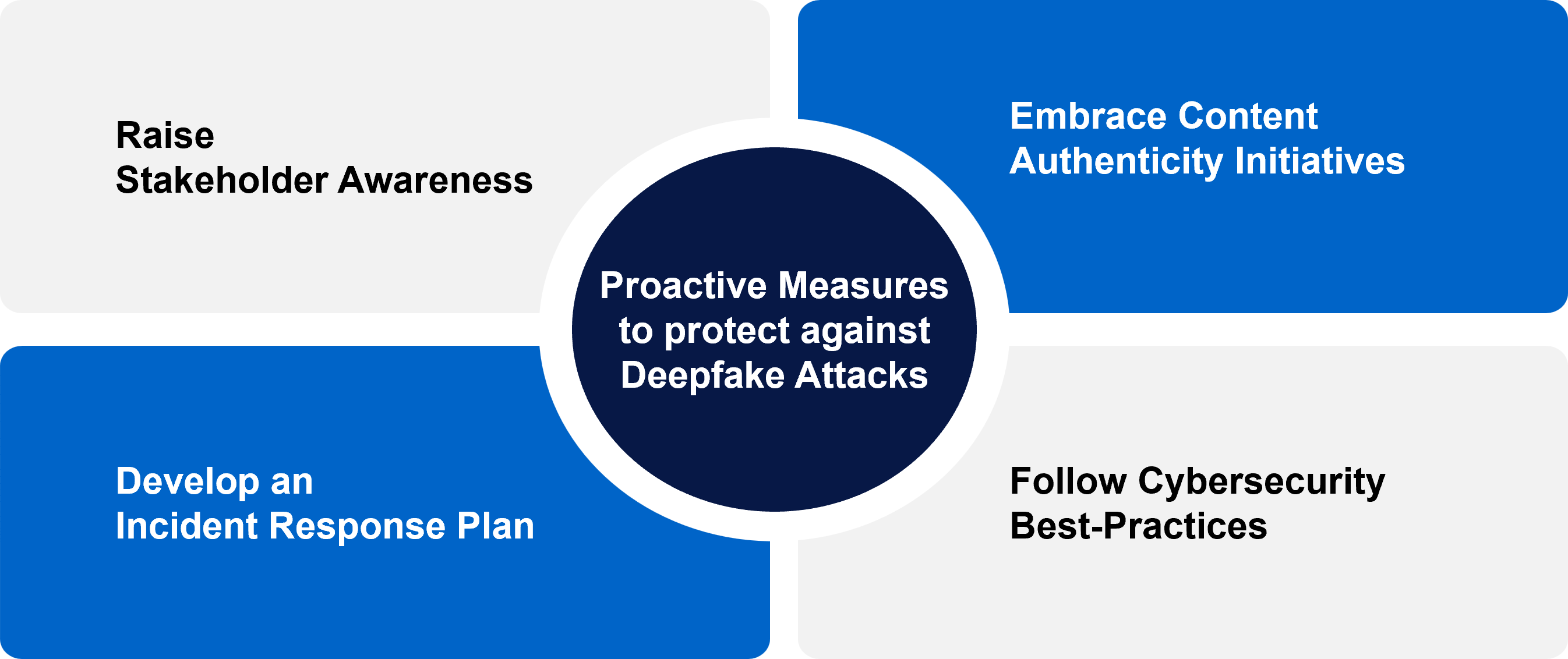

/ Four Ways of Addressing Threats & Vulnerabilities from Gen AI

Source: bluegain Analysis [2024]

/ HERE ARE 4 PROACTIVE MEASURES THAT CxOs CAN TAKE AGAINST SUCH THREATS

- Raise Stakeholder Awareness: Education is a powerful tool in the fight against harmful AI-generated videos. Companies need to make their employees, partners, and customers aware of the potential dangers. This includes training employees to verify the authenticity of sources and to follow reporting practices, and maintaining a strong online presence that makes it easier for customers to distinguish real content from fake. In doing so, companies empower employees and stakeholders to identify and report suspicious content, which plays a critical role in mitigating the risks associated with AI-generated videos.

- Embrace Content Authenticity Initiatives: By joining the Content Authenticity Initiative (CAI) and adopting the Coalition for Content Provenance and Authenticity (C2PA) standard, which is supported by Microsoft, Adobe, Intel, Google, and others, you can enhance content integrity by embedding digital fingerprints and provenance metadata into media assets. Such measures strengthen transparency and trust, safeguard against the manipulation of digital content, and promote ethical AI practices.

- Follow Cybersecurity Best Practices: Another important aspect of preventing these attacks is implementing strong authentication and access controls, such as multi-factor authentication for sensitive accounts, regularly reviewing and updating user access privileges, and monitoring for unauthorized access attempts. Tools such as Sentinel or WeVerify can help detect AI-generated or altered videos, both within the organization and on social media platforms.

- Develop an Incident Response Plan: In the event of a deepfake incident, having a well-defined incident response plan is crucial. This plan should outline the steps to be taken: once the deepfake has been confirmed, contact social media platforms to have the video removed and contain its spread. The source of the deepfake should be identified and eliminated to prevent further incidents, which could involve strengthening security protocols or taking legal action. Finally, inform the public: pre-approved communication templates can be prepared in advance to address media, employee, or vendor concerns and released promptly to minimize the harm caused by the fake video.
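
The content-authenticity and incident-response measures above both depend on being able to show that a given media file is the one the company actually released. As a rough illustration only, the Python sketch below records a SHA-256 fingerprint of a file together with an HMAC signature and later checks a file against that record. It is not an implementation of the C2PA standard, which defines its own signed manifest format; the function names, the record layout, and the shared secret key are all assumptions made for this example.

```python
import hashlib
import hmac
import json


def _signed_payload(publisher: str, digest: str) -> bytes:
    # Hypothetical record layout: the fields covered by the signature,
    # serialized deterministically so signing and verifying agree.
    return json.dumps(
        {"publisher": publisher, "sha256": digest}, sort_keys=True
    ).encode("utf-8")


def make_provenance_record(media_bytes: bytes, publisher: str, key: bytes) -> dict:
    """Fingerprint the media and sign the fingerprint with a secret key."""
    digest = hashlib.sha256(media_bytes).hexdigest()
    signature = hmac.new(
        key, _signed_payload(publisher, digest), hashlib.sha256
    ).hexdigest()
    return {"publisher": publisher, "sha256": digest, "signature": signature}


def verify_media(media_bytes: bytes, record: dict, key: bytes) -> bool:
    """Return True only if the record is untampered and matches the media."""
    expected = hmac.new(
        key, _signed_payload(record["publisher"], record["sha256"]), hashlib.sha256
    ).hexdigest()
    # Reject records whose signature does not match their contents.
    if not hmac.compare_digest(expected, record["signature"]):
        return False
    # Reject media whose fingerprint differs from the signed one.
    actual = hashlib.sha256(media_bytes).hexdigest()
    return hmac.compare_digest(actual, record["sha256"])


if __name__ == "__main__":
    secret = b"example-secret-key"  # assumption: a key held only by the publisher
    original = b"bytes of the official CEO interview video"
    record = make_provenance_record(original, "Example Corp", secret)

    print(verify_media(original, record, secret))
    print(verify_media(b"bytes of a manipulated video", record, secret))
```

In this sketch, any change to the file or to the record causes verification to fail, which is the property an incident response team would rely on when confirming whether a circulating video is genuine. A production setup would more likely use public-key signatures so that third parties can verify content without access to a secret.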

Companies can protect themselves and their stakeholders from the potential dangers of deepfake attacks. A higher level of digital maturity plays a central role in this, as does a strong online presence that acts as a visible counterweight to deepfakes and enables companies to communicate digitally with stakeholders.

Now is the time to take proactive steps to protect stakeholders, reinforce ethical AI practices, and lead responsibly in the evolving AI landscape.

/ ABOUT THE AUTHOR

- Dr. Carsten Linz is the CEO of bluegain. Formerly Group Digital Officer at BASF and Business Development Officer at SAP, he is known for building €100 million businesses and leading large-scale transformations affecting 60,000+ employees. He serves on boards including Shareability’s Technology & Innovation Committee and Social Impact. A member of the World Economic Forum’s Expert Network, Dr. Linz is also a published author who shares his expertise as an educator in executive programs at top business schools.

